Boost Customer Satisfaction with Deep Learning

Introduction

Online businesses receive a large amount of data from digital analytics tools, surveys, feedback forms, and social media interactions. According to Forrester Research between 60 percent and 73 percent of all data within an enterprise goes unused. The goal of this project was to build a machine learning solution that does automatic sentiment analysis for customer reviews. Without any human intervention, millions of customer reviews can be analyzed in minutes. The results of the analysis can be used to establish customer satisfaction KPI. Customer satisfaction KPI can help the management of a company to make better decisions in areas like product development, support, and sales. The current solution helps to utilize customer feedback data in a fully automatic way.

Executive Summary

The current project shows how unstructured user data can be processed by a machine learning application. The whole application was built on AWS Sagemaker, AWS API Gateway, and AWS Lambda. The machine learning pipeline was run on AWS Sagemaker. Other AWS services were used as a Gateway to AWS Sagemaker. Two different machine learning algorithms were tested. The main focus of the project was on testing the Long short-term memory recurrent neural network. As a final proof-of-concept, a serverless web application was deployed to make realtime predictions.

Results

To create a machine learning model a database for information related to films (IMDb) was used. The labeled data was provided by Stanford University. The dataset contains 50,000 positive and negative review examples. XGBoost and Long short-term memory (LSTM) recurrent neural networks were evaluated to create the final model. After the fine-tuning of XGBoost, the accuracy rate was around 84%. This means that from 100 predictions, 84 predictions were right. The XGBoost result was set as a baseline. After training the LSTM neural network, an accuracy rate of 84% was achieved.

Architecture

The project was developed on the AWS Sagemaker platform. The prototyping phase was undertaken inside the Sagemaker notebooks. The whole project can be deployed via a Cloudformation template. Figure 1.0 shows the main architecture of the project.

Figure 1.0: Architecture Customer Satisfaction Project

Figure 1.0: Architecture Customer Satisfaction Project

There are two main parts of the project. The first part deals with data cleansing and training the models. The second part deals with deploying a REST API to make realtime predictions from a web application.

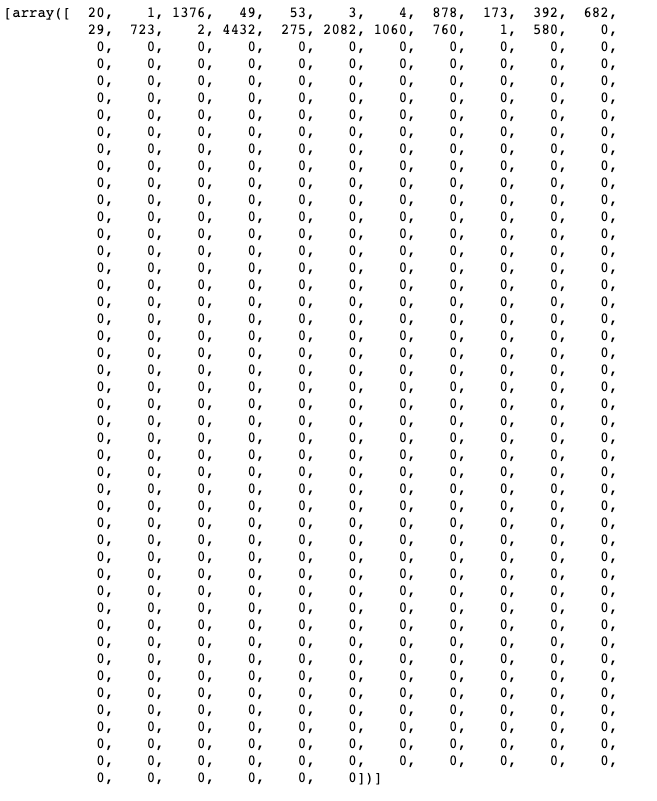

The first part of the project started with the preprocessing of data. The data was downloaded from the Stanford servers and split into train and test parts. It was a 50/50 split. The data wasn’t clean, an important step was to clean the data from HTML tags, to remove the stop words, and to tokenize the data. The very basic “ml.t2.medium” Sagemaker notebook instance was used. It took almost an hour to preprocess 50,000 entries. Since the machine learning algorithms accept only numerical data, the bag-of-words conversion method was applied. In bag-of-words each word is an input feature, the dimensionality of the features was limited to 5000 dimensions. Longer reviews were truncated, and shorter reviews with less than 5000 words were stubbed with a label of 0 for “no word” category. Figure 1.1 shows an numeric representation of an encoded test review: “The simplest pleasures in life are the best, and this film is one of them. Combining a rather basic storyline of love and adventure this movie transcends the usual weekend fair with wit and unmitigated charm.”.

Figure 1.1: Review Array Representation

Figure 1.1: Review Array Representation

All preprocessing steps were cached since each operation was timely and computationally expensive. Once all the preprocessing steps were executed the data was uploaded to S3. Uploading the data to S3 is a crucial step in AWS Sagemaker. Training and inference happen in a container. The container only contains the code for execution, while the data remains in S3. Sagemaker expects labels to be in the first column and input features as subsequent columns.

In Sagemaker the model comprises three objects: 1) model artifacts; 2) training code; and 3) inference code. An LSTMClassifier model was used to perform sentiment analysis. The input layer consists of 5000 input features and the output layer consists of one node. Sigmoid activation function was used to output the probability between 0 and 1. Because AWS Sagemaker does not have GPU-enabled instances as default, the initial training was done on CPU and took about two hours. To use GPU-enabled instances, a support ticket has to be submitted. The batch size was 50 and 10 epochs were defined for the training part. To test the model accuracy, 25,000 reviews were submitted to the Sagemaker endpoint. The accuracy rate was exactly 0.84216. It took approximately 20 seconds to submit 25,000 reviews and get the probability for each review. Figure 1.2 shows the final accuracy score.

Figure 1.2: Accuracy Score Sentiment Analysis

In the second and final part of the project the model was deployed as a web application. The whole web application contains the training, inference and REST API parts, and all parts can be automatically deployed via Cloudformation. AWS API Gateway was used as the REST API endpoint. AWS Lambda function has an IAM role to call Sagemaker’s endpoint for inference. The whole serialization and deserialization logic happens inside the Sagemaker inference container. AWS Lambda acts as a proxy between the Web API Endpoint and Sagemaker Endpoint.

Figure 1.3: Positive Sentiment Titanic

Figure 1.3: Positive Sentiment Titanic

Figure 1.4: Negative Sentiment The Godfather II

Figure 1.4: Negative Sentiment The Godfather II

Figure 1.3 and Figure 1.4 show the results from the deployed web application. As shown in the figures, the model can recognize negative and positive reviews. The API endpoint can act as a sentiment analysis tool to classify customer reviews in realtime. Another possibility is to use Sagemaker as a sentiment analysis tool for batch processes. By doing so, large volumes of data can be analyzed within a short period of time. Using either batch or real-time processing of data, any company is able to monitor the customer satisfaction KPI. Figure 1.5 shows customer satisfaction KPI. The metric can be used to optimize the product, support, and sales processes.

Figure 1.5: Customer Satisfaction KPI

Figure 1.5: Customer Satisfaction KPI

Conclusion

Machine learning helps companies to fully utilize big data without any human intervention. It reduces costs and helps companies to create metrics such as customer satisfaction to optimize internal processes. AWS Sagemaker offers a set of technologies that can be used to create and deploy an end-to-end machine learning pipeline that delivers high accuracy and speed of inference. The current project shows how companies can create better products and to monitor performance of existing products based on customer feedback.

Recommendations

During the project, no hyper-parameter optimization was performed. There are five parameters that can be tweaked to improve performance. The embedding dimensions, the hidden dimension, the size of the vocabulary, the batch-size and the number of epoch should be tested to improve the model’s accuracy. Dropout can be used to avoid overfitting. Besides LSTMClassifier, the XGBoost algorithm should be evaluated too. Since XGBoost was showing almost the same results. Another plus for XGBoost is that it can be less computationally expensive. Therefore tuning of XGBoost should be considered.

The code is not covered with unit tests. There are no further plans to do code refactoring or any other code optimizations. The project was developed during the Udacity ML program.